AdobeStock / macrovector

The future is already here

Artificial intelligence and social security – potential, opportunities, limitations

ed* No. 01/2019 – Chapter 2

The technological development of artificial intelligence (AI) and its potential use appear to be difficult to assess at present. One thing is certain: in the future, algorithms will increasingly assist people in making decisions or will be able to make them themselves. This raises questions about how to control them effectively and about a legal and ethical framework – especially when AI-supported decisions have a direct impact on people.

AI – no longer a dream, it’s reality

The topic of artificial intelligence is currently high on the agenda at both European and national level. In April 2018, 25 European countries, including Germany, declared that they would cooperate on the most important issues relating to AI. A further four countries have since followed. The focus is on how to ensure Europe’s competitiveness in the research and use of AI, as well as on social, economic, ethical and legal aspects of AI. At the same time, the European Commission presented its Communication on ‘Artificial Intelligence for Europe’.1 The initiative is based on three elements: firstly, boosting the EU’s technological and industrial performance and the uptake of AI across the economy, in both the private and public sectors; secondly, preparing for the socio-economic changes resulting from AI; and thirdly, ensuring an appropriate ethical and legal framework based on the values of the Union and consistent with the Charter of Fundamental Rights of the European Union. Member States are invited to draw up national AI strategies or programmes by mid-2019.

Germany published its ‘Artificial Intelligence Strategy’ in November 2018. As part of its strategy, the Federal Government also wants to check for gaps and loopholes in the existing legal framework with regard to algorithm-based and AI-based decisions, services and products and adapt them where necessary.2 This is because it believes that the increased number of decisions made by AI affects political, cultural, legal and ethical issues. Federal Justice Minister Katarina Barley and Federal Labour Minister Hubertus Heil explained that clearer rules and legal certainty are needed if algorithms are to prepare or take over an increasing number of tasks and decisions for people. Otherwise there will be no trust in the applications of AI.3

What is artificial intelligence?

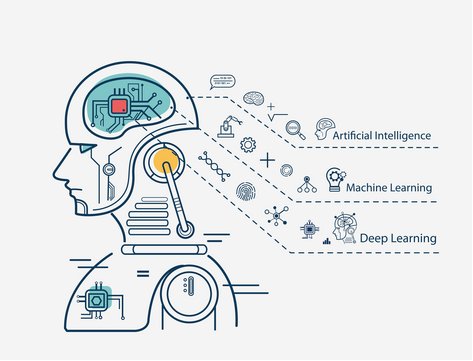

Artificial intelligence has a long history. In a test he developed in 1950, mathematician Alan Turing described a system as intelligent if its answers and reactions are indistinguishable from those of a human being.4 However, after more than 60 years of research, there does not seem to be a uniform definition of AI. One reason for this could be that AI is an open term that refers to a wide range of products and applications.5 The European Commission’s High-Level Expert Group on Artificial Intelligence developed a working definition of AI in April 2018: ‘Artificial intelligence (AI) refers to systems that display intelligent behaviour by analysing their environment and taking actions – with some degree of autonomy – to achieve specific goals. AI-based systems can be purely software-based, acting in the virtual world (e.g. voice assistants, image analysis software, search engines, speech and face recognition systems) or AI can be embedded in hardware devices (e.g. advanced robots, autonomous cars, drones or Internet of Things applications).’6

AI can be divided into two forms: strong (general) AI and weak (narrow) AI. Strong AI refers to systems that are intended to have the same intellectual abilities as humans, whereas weak AI refers to systems that have been programmed to perform a few specific tasks.