pixabay/tungnguyen

The AI Act:

Europe’s path to the digital future

ed* No. 02/2024 – Chapter 2

The EU has set itself the task of tapping the full potential of AI and promoting innovation in this area, while ensuring its safe and reliable application. The EU’s need for regulation arises from the risks that certain AI systems pose to the safety and fundamental rights of their users, not least due to a lack of transparency. This can lead to a lack of trust, legal uncertainty and slower uptake of AI in many areas. Above all, the unregulated use of AI runs the risk of exacerbating discrimination that already exists in the analogue world. Such potentially unlawful disadvantages are a particularly great challenge when AI is used in the administration of public services, and therefore also in the area of social insurance.

When regulating AI, the EU pursues a value-based approach that goes back to the European Declaration on Digital Rights and Principles for the Digital Decade. The Declaration, signed by the President of the European Commission, the President of the European Parliament and the President of the Council of the EU in 2022, contains guidelines for human-centric, trustworthy and ethical AI. According to the Declaration, AI systems should not be used to pre-empt people's choices regarding health and employment, among other things. Even before the Declaration was signed, the European Commission had begun a comprehensive consultation process for an AI Act, for which it presented a proposal in 2021.

World’s first regulation of AI

The Regulation laying down harmonised rules on artificial intelligence, the so-called AI Act, is a central component of a broader package of EU measures to support trustworthy AI. It is intended to strike a balance between ensuring the safety and fundamental rights of people and companies, and promoting investment and innovation in the field of AI. The AI Act entered into force on 1 August 2024, following difficult and lengthy negotiations between the European Parliament and the Council of the EU. It applies across all sectors in order to prevent competing legislation in individual areas. Under the AI Act, AI systems are defined as machine-based systems that operate with some degree of autonomy and derive outputs such as predictions, recommendations or decisions from the input they receive, depending on the area of application.

Regulation of AI - an international discussion

For quite some time now, the regulation of AI has been under discussion not just in the EU but worldwide. In 2019, the Organisation for Economic Co-operation and Development (OECD) laid down the first intergovernmental standards in this area with a recommendation on AI. The recommendation contains principles including respect for human rights and democratic values as well as transparency and traceability and the guarantee of legal responsibility by the users, developers and administrators of AI systems. The principles of the G20 states on AI formulated in Osaka in the same year were derived from this recommendation. The OECD AI recommendation was revised and adapted in 2023 and 2024.

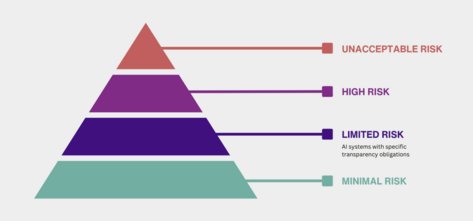

The AI Act aims to strike a balance between a high level of protection and the promotion of innovation through a risk-based approach. Under this approach, AI systems are first assessed and categorised into four classes, depending on their risk potential: unacceptable risk, high risk, limited risk and minimal risk. The higher the assessed risk of an application, the stricter the rules that providers and operators of the AI systems concerned must comply with. Authorities or other public organisations that use an AI system under their own responsibility must also comply with these rules. The EU regulations range from labelling and compliance with documentation and due diligence obligations to a complete ban on applications that pose an unacceptable risk. The intensity of regulation therefore depends on the risk associated with the use of the respective AI system.

Focus on AI systems with high risk

Accordingly, the AI Regulation focuses on the two highest risk classes. Only limited transparency and information obligations apply to lower-risk applications. In contrast, AI systems that pose an unacceptable risk will be banned. These include, for instance, emotion recognition systems in the workplace and in schools, the social classification of people based on behaviour, socio-economic status and personal characteristics (social scoring) and the biometric identification and categorisation of natural persons.

So-called high-risk AI systems, which under the AI Act pose a high risk to the health and safety or fundamental rights of natural persons, are also heavily regulated. This category covers AI systems used in connection with access to and the provision of essential private and public services. These services include, among others, health services and social services that provide protection in cases such as maternity, illness, accidents at work, the need for care or old age. Systems that are used for risk assessment and pricing in relation to life and health insurance are also categorised as high-risk AI systems. Although this does not apply to statutory health insurance, it certainly applies to private health insurance.

Risk pyramid

Any AI system used to determine whether, for example, health services, social security benefits and social services should be granted or denied can have a significant impact on people’s livelihoods and might violate their fundamental rights such as the right to social protection, non-discrimination and human dignity. The regulations for these systems are comprehensive in line with the high risk they pose. For example, providers and operators of these systems must set up a risk management system, meet quality management and information obligations, ensure the accuracy, reliability and security of the system and continually monitor and, if necessary, correct the system. Failure to comply with the requirements of the AI Regulation will result in significant penalties.